As we encounter advanced technologies like ChatGPT and BERT daily, it’s intriguing to delve into the core technology driving them: transformers.

This article aims to simplify transformers, explaining what they are, how they function, why they matter, and how you can incorporate this machine learning approach into your marketing efforts.

While other guides on transformers exist, this article focuses on providing a straightforward summary of the technology and highlighting its revolutionary impact.

Understanding transformers and natural language processing (NLP)

Attention has been one of the most important elements of natural language processing systems. This sentence alone is quite a mouthful, so let’s unpack it.

Early neural networks for natural language problems used an encoder RNN (recurrent neural network).

The results were sent to a decoder RNN. This was the so-called “sequence to sequence” model, which would encode each part of an input (turning that input into numbers) and then decode and turn that into an output.

The last part of the encoding (i.e., the last “hidden state”) was the context passed along to the decoder.

In simple terms, the encoder would put together and create a “context” state from all of the encoded parts of the input and transfer that to the decoder, which would pull apart the parts of the context and decode them.

Throughout processing, the RNNs would have to update the hidden states based on the current input and the previous inputs. This was quite computationally complex and could be rather inefficient.
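To make the sequential bottleneck concrete, here is a minimal sketch of an RNN encoder in plain Python. The weights, the toy input values, and the function name `rnn_encode` are all assumptions made for illustration; a real model learns its weights and works on much larger vectors.

```python
import math

def rnn_encode(inputs, w_in, w_hidden):
    """Run a tiny RNN over the inputs and return every hidden state."""
    hidden = [0.0] * len(w_hidden)
    states = []
    for x in inputs:  # strictly one step after another
        # Each new hidden state depends on the current input AND the previous state.
        hidden = [
            math.tanh(
                w_in[i] * x
                + sum(w_hidden[i][j] * hidden[j] for j in range(len(hidden)))
            )
            for i in range(len(hidden))
        ]
        states.append(hidden)
    return states

inputs = [0.5, -1.0, 0.25]             # toy "encoded" input tokens (hypothetical)
w_in = [0.8, -0.3]                     # hypothetical input weights
w_hidden = [[0.1, 0.2], [-0.4, 0.3]]   # hypothetical recurrent weights

states = rnn_encode(inputs, w_in, w_hidden)
context = states[-1]  # only the last hidden state is handed to the decoder
```

Because each step needs the previous hidden state, the loop cannot be run in parallel, and everything the decoder learns about the input has to fit into that single `context` vector.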

Models couldn’t handle long contexts. While this is still an issue today, the limit on text length was far more obvious back then. The introduction of “attention” allowed the model to pay attention to only the parts of the input it deemed relevant.

Attention unlocks efficiency

The pivotal paper “Attention Is All You Need” introduced the transformer architecture.

This model abandons the recurrence mechanism used in RNNs and instead processes input data in parallel, significantly improving efficiency.

Like earlier NLP models, it consists of an encoder and a decoder, each comprising multiple layers.

However, with transformers, each layer has multi-head self-attention mechanisms and fully connected feed-forward networks.

The encoder’s self-attention mechanism helps the model weigh the importance of each word in a sentence when understanding its meaning.

Pretend the transformer model is a monster:

The “multi-head self-attention mechanism” is like having multiple sets of eyes that simultaneously focus on different words and their connections to understand the sentence’s full context better.

The “fully connected feed-forward networks” are a series of filters that help refine and clarify each word’s meaning after considering the insights from the attention mechanism.

In the decoder, the attention mechanism assists in focusing on relevant parts of the input sequence and the previously generated output, which is crucial for producing coherent and contextually relevant translations or text generations.

The transformer’s encoder doesn’t just send a final step of encoding to the decoder; it transmits all hidden states and encodings.

This rich information allows the decoder to apply attention more effectively. It evaluates associations between these states, assigning and amplifying the scores that matter in each decoding step.

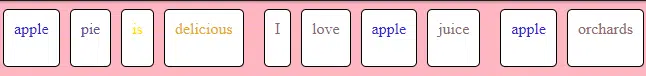

Attention scores in transformers are calculated using a set of queries, keys and values. Each word in the input sequence is converted into these three vectors.

The attention score for a word is computed by taking its query vector and calculating its dot product with all key vectors.

These scores determine how much focus, or “attention,” each word should place on the other words. The scores are then scaled down and passed through a softmax function to get a distribution that sums to one.

To balance these attention scores, transformers employ the softmax function, which normalizes them into positive values between zero and one. This lets the scores act as proportions of attention spread across the words in a sentence.
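The query, key and value steps described above can be sketched in a few lines of plain Python. This is a simplified illustration, not a production implementation: the toy vectors are made up, and a real transformer learns separate projections to produce the queries, keys and values. The scaling factor used here (the square root of the key dimension) follows the original transformer paper.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to one."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Dot product of this query with every key, scaled down by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted mix of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "words", each already turned into a 2-number vector (hypothetical).
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(vectors, vectors, vectors)
```

Each row of `weights` shows how one word spreads its attention over all three words, and each row sums to one.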

Instead of examining words individually, the transformer model processes multiple words simultaneously, making it faster and more intelligent.

If you think about how much of a breakthrough BERT was for search, you can see that the enthusiasm came from BERT being bidirectional and better at context.

In language tasks, understanding the order of words is crucial.

The transformer model accounts for this by adding special information called positional encoding to each word’s representation. It’s like placing markers on words to inform the model about their positions in the sentence.
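One way to build those position markers is the sinusoidal scheme from the original transformer paper, sketched below. The article does not say which scheme a given model uses, and many models learn their position information instead, so treat this as one illustrative option. The toy word vector is made up.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal position table: one row of d_model numbers per position."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Pairs of dimensions share a frequency; even uses sin, odd uses cos.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

# The same toy word vector repeated at three positions (hypothetical values).
embeddings = [[0.1, 0.2, 0.3, 0.4]] * 3
pe = positional_encoding(3, 4)

# Adding the position row makes identical words distinguishable by position.
with_position = [
    [e + p for e, p in zip(emb, row)] for emb, row in zip(embeddings, pe)
]
```

Without the added rows, the three identical word vectors would look the same to the attention mechanism regardless of where they appear.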

During training, the model compares its translations with correct translations. If they don’t align, it refines its settings to approach the correct results. The measure of how far the output is from the correct result is called a “loss function.”
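As a rough illustration of what a loss function measures, the sketch below uses cross-entropy, a common choice for language models. The article does not name a specific loss, and the three-word vocabulary and probabilities are invented for the example.

```python
import math

def cross_entropy(predicted_probs, correct_index):
    """Penalty for one prediction: small when the correct word gets high probability."""
    return -math.log(predicted_probs[correct_index])

vocab = ["chat", "chien", "maison"]   # hypothetical tiny target vocabulary
correct = vocab.index("chat")         # the correct translation of "cat"

confident_right = [0.90, 0.05, 0.05]  # model puts most probability on "chat"
confident_wrong = [0.05, 0.90, 0.05]  # model puts most probability on "chien"

loss_right = cross_entropy(confident_right, correct)
loss_wrong = cross_entropy(confident_wrong, correct)
```

Training nudges the weights in the direction that lowers this number, so a prediction like `confident_wrong` produces a much larger correction than `confident_right`.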

When working with text, the model can select words step by step. It can either opt for the best word each time (greedy decoding) or consider multiple options (beam search) to find the best overall translation.
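The difference between the two strategies shows up even in a toy example. In the sketch below, the table of next-word probabilities is entirely made up and stands in for a real model’s predictions. It is built so that the best first word does not lead to the best overall sequence.

```python
import math

# Hypothetical next-word probabilities, keyed by the previous word.
NEXT = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.9, "dog": 0.1},
}

def greedy(steps=2):
    """Pick the single most likely word at every step."""
    seq, logp = ["<s>"], 0.0
    for _ in range(steps):
        word, p = max(NEXT[seq[-1]].items(), key=lambda kv: kv[1])
        seq.append(word)
        logp += math.log(p)
    return seq[1:], logp

def beam_search(steps=2, beam_width=2):
    """Keep the beam_width best partial sequences at every step."""
    beams = [(["<s>"], 0.0)]
    for _ in range(steps):
        candidates = [
            (seq + [word], logp + math.log(p))
            for seq, logp in beams
            for word, p in NEXT[seq[-1]].items()
        ]
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    best_seq, best_logp = beams[0]
    return best_seq[1:], best_logp

greedy_seq, greedy_logp = greedy()
beam_seq, beam_logp = beam_search()
```

Greedy decoding commits to “the” because it is the likeliest first word, while beam search keeps “a” alive long enough to discover that “a cat” is the more probable sequence overall.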

In transformers, each layer is capable of learning different aspects of the data.

Typically, the lower layers of the model capture more syntactic aspects of language, such as grammar and word order, because they are closer to the original input text.

As you move up to higher layers, the model captures more abstract and semantic information, such as the meaning of phrases or sentences and their relationships within the text.

This hierarchical learning allows transformers to understand both the structure and meaning of the language, contributing to their effectiveness in various NLP tasks.

What is training vs. fine-tuning?

Training the transformer involves exposing it to numerous translated sentences and adjusting its internal settings (weights) to produce better translations. This process is akin to teaching the model to be a proficient translator by showing it many examples of accurate translations.

During training, the program compares its translations with correct translations, allowing it to correct its errors and improve its performance. This step can be thought of as a teacher correcting a student’s mistakes to facilitate improvement.

The difference between a model’s training set and post-deployment learning is significant. Initially, models learn patterns, language, and tasks from a fixed training set, which is a pre-compiled and vetted dataset.

After deployment, some models can continue to learn from new data they’re exposed to, but this isn’t an automatic improvement. It requires careful management to ensure the new data is helpful and not harmful or biased.

Transformers vs. RNNs

Transformers differ from recurrent neural networks (RNNs) in that they handle sequences in parallel and use attention mechanisms to weigh the importance of different parts of the input data, making them more efficient and effective for certain tasks.

Transformers are currently considered the best in NLP due to their effectiveness at capturing language context over long sequences, enabling more accurate language understanding and generation.

They are generally seen as better than a long short-term memory (LSTM) network (a type of RNN) because they are faster to train and can handle longer sequences more effectively due to their parallel processing and attention mechanisms.

Transformers are used instead of RNNs for tasks where context and the relationship between elements in sequences are paramount.

The parallel processing nature of transformers enables simultaneous computation of attention for all sequence elements. This reduces training time and allows models to scale effectively with larger datasets and model sizes, accommodating the increasing availability of data and computational resources.

Transformers have a versatile architecture that can be adapted beyond NLP. They have expanded into computer vision through vision transformers (ViTs), which treat patches of images as sequences, similar to words in a sentence.

This allows ViTs to apply self-attention mechanisms to capture complex relationships between different parts of an image, leading to state-of-the-art performance in image classification tasks.
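The “patches as words” idea can be sketched with a toy image. The 4x4 grid of pixel values, the patch size, and the function name `image_to_patches` are assumptions for illustration; a real ViT works on much larger images and then projects each flattened patch into an embedding.

```python
def image_to_patches(image, patch_size):
    """Split a 2D grid of pixel values into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches

# Toy 4x4 grayscale "image" whose pixel values are simply 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]

# Four 2x2 patches, read left to right and top to bottom, like words in a sentence.
patches = image_to_patches(image, 2)
```

The resulting list of patches plays the same role as a sequence of word vectors, so the same self-attention machinery can be applied to it.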


About the models

BERT

BERT (bidirectional encoder representations from transformers) employs the transformer’s encoder mechanism to understand the context around each word in a sentence.

Unlike GPT, BERT looks at the context from both directions (bidirectionally), which helps it understand a word’s intended meaning based on the words that come before and after it.

This is particularly useful for tasks where understanding the context is crucial, such as sentiment analysis or question answering.
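The contrast with GPT can be pictured as an attention mask: a grid that says which words each word is allowed to look at. The sketch below is a simplified illustration with a made-up function name, not code from either model.

```python
def attention_mask(seq_len, causal):
    """Row = the word doing the looking, column = the word being looked at."""
    return [
        [(not causal) or key <= query for key in range(seq_len)]
        for query in range(seq_len)
    ]

bert_style = attention_mask(3, causal=False)  # every word sees every word
gpt_style = attention_mask(3, causal=True)    # each word sees itself and earlier words
```

In `bert_style` every entry is `True`, so the middle word can use the words on both sides. In `gpt_style` the entries above the diagonal are `False`, so a word never sees what comes after it.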

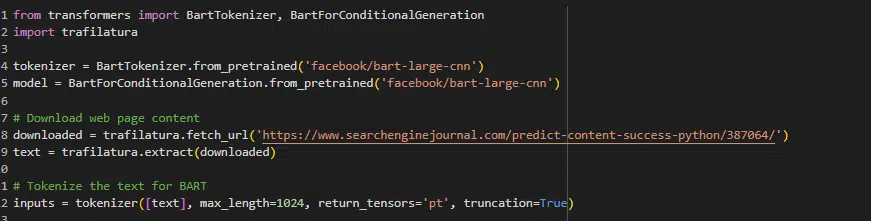

BART

Bidirectional and auto-regressive transformer (BART) combines BERT’s bidirectional encoding capability and the sequential decoding ability of GPT. It is particularly useful for tasks involving understanding and generating text, such as summarization.

BART first corrupts text with an arbitrary noising function and then learns to reconstruct the original text, which helps it capture the essence of what the text is about and generate concise summaries.

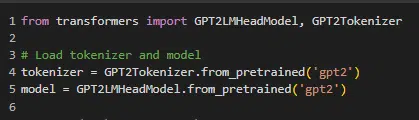

GPT

The generative pre-trained transformers (GPT) model uses the transformer’s decoder mechanism to predict the next word in a sequence, making it useful for generating relevant text.

GPT’s architecture allows it to generate not just plausible next words but entire passages and documents that can be contextually coherent over long stretches of text.

This has been the game-changer in machine learning circles, as more recent large GPT models can mimic people pretty well.

ChatGPT

ChatGPT, like GPT, is a transformer model specifically designed to handle conversational contexts. It generates responses in a dialogue format, simulating a human-like conversation based on the input it receives.

Breaking down transformers: The key to efficient language processing

When explaining the capabilities of transformer technology to clients, it’s crucial to set realistic expectations.

While transformers have revolutionized NLP with their ability to understand and generate human-like text, they are not a magic knowledge tree that can replace entire departments or execute tasks flawlessly, as depicted in idealized scenarios.


Transformers like BERT and GPT are powerful for specific applications. However, their performance relies heavily on the quality of the data they were trained on and on ongoing fine-tuning.

RAG (retrieval-augmented generation) can be a more dynamic approach, where the model retrieves information from a database to generate responses instead of relying on static fine-tuning on a fixed dataset.

But this isn’t the fix for all issues with transformers.
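The retrieval step in RAG can be sketched without any model at all. In the example below, the two documents, the word-overlap scoring, and the function names are all stand-ins invented for illustration. Real systems typically retrieve with embeddings and a vector database, then pass the assembled prompt to a language model.

```python
def retrieve(question, documents, top_k=1):
    """Rank documents by shared words with the question (a stand-in for real retrieval)."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda d: len(q_words & set(d.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Place the retrieved text in front of the question for the model to use."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}"

# Hypothetical knowledge base.
documents = [
    "Our store opens at 9 am on weekdays.",
    "Returns are accepted within 30 days of purchase.",
]

prompt = build_prompt("When are returns accepted?", documents)
```

The model then answers from the retrieved context, so updating the documents changes the answers without retraining. The quality of the answer still depends on retrieving the right document in the first place.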

Frequently asked questions

Do models like GPT generate topics? Where does the corpus come from?

Models like GPT don’t self-generate topics; they generate text based on prompts given to them. They can continue a given topic or change topics based on the input they receive.

In reinforcement learning from human feedback (RLHF), who provides the feedback, and what form does it take?

In RLHF, the feedback is provided by human trainers who rate or correct the model’s outputs. This feedback shapes the model’s future responses to align more closely with human expectations.

Can transformers handle long-range dependencies in text, and if so, how?

Transformers can handle long-range dependencies in text through their self-attention mechanism, which allows each position in a sequence to attend to all other positions within the same sequence, both past and future tokens.

Unlike RNNs or LSTMs, which process data sequentially and may lose information over long distances, transformers compute attention scores in parallel across all tokens, making them adept at capturing relationships between distant parts of the text.

How do transformers manage context from past and future input in tasks like translation?

In tasks like translation, transformers manage context from past and future input using an encoder-decoder structure.

- The encoder processes the entire input sequence, creating a set of representations that include contextual information from the entire sequence.

- The decoder then generates the output sequence one token at a time, using both the encoder’s representations and the previously generated tokens to inform the context, allowing it to consider information from both directions.

How does BERT learn to understand the context of words within sentences?

BERT learns to understand the context of words within sentences through its pre-training on two tasks: masked language model (MLM) and next sentence prediction (NSP).

- In MLM, some percentage of the input tokens are randomly masked, and the model’s objective is to predict the original value of the masked words based on the context provided by the other non-masked words in the sequence. This task forces BERT to develop a deep understanding of sentence structure and word relationships.

- In NSP, the model is given pairs of sentences and must predict if the second sentence is the next sentence in the original document. This task teaches BERT to understand the relationship between consecutive sentences, enhancing contextual awareness. Through these pre-training tasks, BERT captures the nuances of language, enabling it to understand context at both the word and sentence levels.
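The masking step in MLM can be sketched as follows. This is a simplified version: the function name and the seed are assumptions, and the default rate of 15% matches what the BERT authors report, though their full procedure also sometimes swaps in a random word or leaves the chosen word unchanged.

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Hide a random share of tokens and remember what was hidden."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_rate:
            masked.append("[MASK]")
            labels.append(token)   # the word the model must recover
        else:
            masked.append(token)
            labels.append(None)    # nothing to predict at this position
    return masked, labels

tokens = "the cat sat on the mat".split()
masked, labels = mask_tokens(tokens, mask_rate=0.5)
```

During pre-training, the model sees `masked` and is scored only on the positions where `labels` holds a word, which forces it to use the surrounding words on both sides.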

What are marketing applications for machine learning and transformers?

- Content generation: They can create content, aiding in content marketing strategies.

- Keyword analysis: Transformers can be employed to understand the context around keywords, helping to optimize web content for search engines.

- Sentiment analysis: Analyzing customer feedback and online mentions to inform brand strategy and content tone.

- Market research: Processing large sets of text data to identify trends and insights.

- Personalized recommendations: Creating personalized content recommendations for users on websites.


Key takeaways

- Transformers allow for parallelization of sequence processing, which significantly speeds up training compared to RNNs and LSTMs.

- The self-attention mechanism lets the model weigh the importance of each part of the input data differently, enabling it to capture context more effectively.

- They can manage relationships between words or subwords in a sequence, even when they are far apart, improving performance on many NLP tasks.

Interested in testing transformers? Here’s a Google Colab notebook to get you started.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.